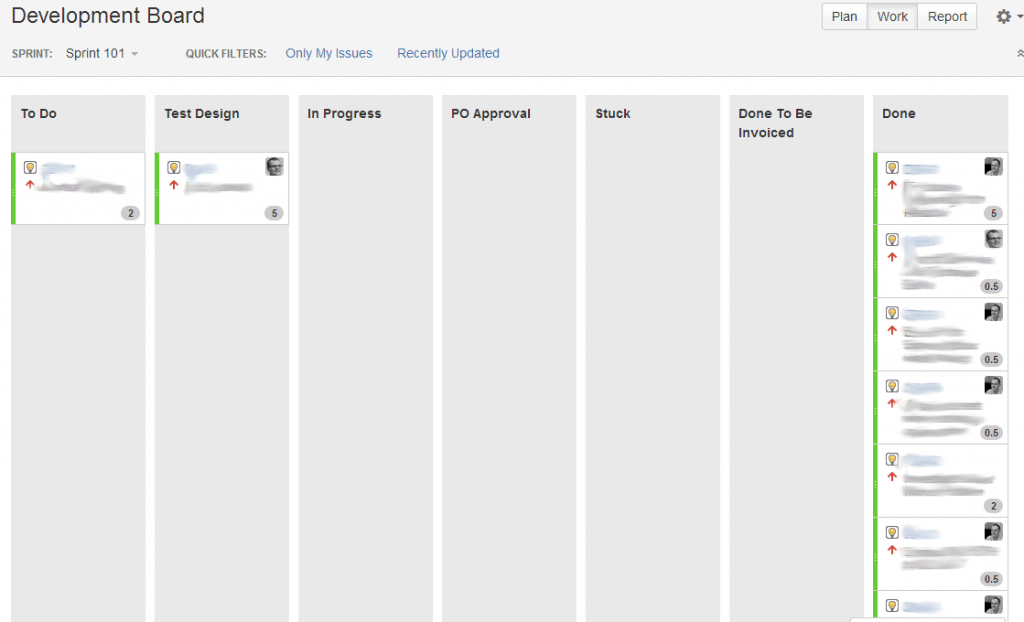

Our approach to Agile testing hasn’t really changed much over the years, although we have got better at formalising it. After seven years of using whiteboards, we recently moved over to a web-based solution (Jira with the GreenHopper plugin), so my piccie above is of our electronic board. I’ve done a bit of blurring for confidentiality!

You can see that our process starts with Test Design rather than “Analysis” or some other such unstructured process. We prefer to define our user stories in terms of acceptance tests. Although we show this on our board as a separate step, it is more an affirmation of discipline than a strict series of steps: in practice, we discover and invent new acceptance tests as we develop. “We” refers to developers, testers, clients, Product Owners and anyone else who shows an interest!

Test Design

Our preferred approach is a fairly standard “As a xxxx I would like to yyyy so that zzzz” statement of the user story. We then create acceptance criteria in a “Given aaaa when bbbb then cccc” format, which flows very nicely into a BDD automated testing approach. We are predominantly fans of NSpec rather than SpecFlow. There is no special reason for this other than it being the approach we tried first! SpecFlow is a little wordy for our taste, and since we have more development resource than BA or testing resource, this choice seems to make sense for now.
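To make the Given/When/Then idea a bit more concrete, here is a minimal sketch of how one story and its acceptance criteria might turn into executable checks. We use NSpec (C#) for this in practice; the sketch uses Python's built-in `unittest` purely to keep it self-contained, and the `Basket` class and shopping story are hypothetical, invented for illustration.

```python
import unittest


class Basket:
    """Hypothetical domain object, used only to show the shape of the tests."""

    def __init__(self):
        self.items = {}

    def add(self, sku, quantity=1):
        if quantity < 1:
            raise ValueError("quantity must be at least 1")
        self.items[sku] = self.items.get(sku, 0) + quantity

    def total_items(self):
        return sum(self.items.values())


class WhenAddingAnItemToABasket(unittest.TestCase):
    # Story (hypothetical): As a shopper I would like to add items to my
    # basket so that I can buy several things in one order.

    def setUp(self):
        # Given an empty basket
        self.basket = Basket()

    def test_then_the_basket_contains_that_item(self):
        # When I add one "ABC-1"
        self.basket.add("ABC-1")
        # Then the basket holds exactly one item
        self.assertEqual(self.basket.total_items(), 1)

    def test_then_adding_the_same_item_again_increases_the_quantity(self):
        # When I add "ABC-1" once, then two more
        self.basket.add("ABC-1")
        self.basket.add("ABC-1", 2)
        # Then the basket records a quantity of three for that item
        self.assertEqual(self.basket.items["ABC-1"], 3)

    def test_then_a_zero_quantity_is_rejected(self):
        # When I try to add zero of an item, Then the basket refuses it
        with self.assertRaises(ValueError):
            self.basket.add("ABC-1", 0)
```

Each test method maps to one "Then" clause, and `setUp` plays the part of "Given", so the test names read back almost like the acceptance criteria themselves. Running `python -m unittest` against the file executes all three.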

Tools

As we get into development, we start to look at some other testing processes and tools. For GUI testing, we are Selenium fans. It’s a pretty simple choice and lets us run tests across the various browsers we support. We can also include these (lengthy) GUI tests in overnight automated test runs.

For integration (AKA component) and unit testing we favour the standard framework for each language, such as MSTest, JUnit, PHPUnit etc. We usually couple this with a mocking framework such as Moq or jMock. For iOS (iPhone / iPad) app development we have settled on GHUnit and OCMock for now.
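The value of pairing a unit test framework with a mocking framework is that collaborators can be swapped out, so a test checks one class's behaviour and its interactions without touching real payment systems or databases. The sketch below shows the pattern using Python's `unittest.mock` as a stand-in for Moq or jMock; the `OrderService`, gateway and repository are hypothetical names made up for this example.

```python
import unittest
from unittest import mock


class OrderService:
    """Hypothetical service: charges a payment gateway, then records the order."""

    def __init__(self, gateway, repository):
        # Collaborators are injected, so tests can replace them with mocks.
        self.gateway = gateway
        self.repository = repository

    def place_order(self, customer_id, amount):
        if amount <= 0:
            raise ValueError("amount must be positive")
        receipt = self.gateway.charge(customer_id, amount)
        self.repository.save(customer_id, amount, receipt)
        return receipt


class OrderServiceTests(unittest.TestCase):
    def setUp(self):
        # Mocks stand in for the real gateway and repository.
        self.gateway = mock.Mock()
        self.repository = mock.Mock()
        self.service = OrderService(self.gateway, self.repository)

    def test_charges_gateway_and_saves_receipt(self):
        # Stub the gateway's answer, then verify the interactions.
        self.gateway.charge.return_value = "receipt-42"
        receipt = self.service.place_order("cust-1", 100)
        self.assertEqual(receipt, "receipt-42")
        self.gateway.charge.assert_called_once_with("cust-1", 100)
        self.repository.save.assert_called_once_with("cust-1", 100, "receipt-42")

    def test_invalid_amount_never_reaches_gateway(self):
        with self.assertRaises(ValueError):
            self.service.place_order("cust-1", 0)
        # Neither collaborator should have been touched.
        self.gateway.charge.assert_not_called()
        self.repository.save.assert_not_called()
```

The same shape (inject, stub, verify) carries over to Moq, jMock or OCMock, although each has its own syntax for setting up return values and verifying calls.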

We are also big fans of zaproxy (the OWASP Zed Attack Proxy) for security testing our web-based products. Obviously, this is a tool rather than a process, so we also run through some other security testing tricks we have up our sleeve!

When it comes to performance testing web applications, our favoured tool is JMeter (regardless of the underlying technology of the web application). It’s hard work at first, but worth persevering with!

It’s worth noting that we also review other tools and processes regularly. In particular, we have recently been closely following the development of the Telerik Test Studio suite. Although it is a little pricey and proprietary, it would be good to bring some of our disparate testing together in one place.

Schedule

Once we had built up significant automated test suites, we found that they were taking a long time to execute! We currently follow this schedule for most of our application development work, running the quicker suites on every check-in and the slower or more manual ones less often:

On Check-In

- Automated Unit Test Execution

- Automated Integration (Component) Test Execution

- Automated Acceptance Test Execution

Overnight

- Automated GUI Test Execution

Weekly

- Semi-Automated Performance Test

- Semi-Automated Security Test

- Manual User Experience Testing

- Manual Exploratory Testing
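One way to keep a schedule like this explicit is to record it as data that a build server or script can read. The sketch below is a hypothetical Python illustration, not our actual build configuration: the `Trigger` names, suite labels and `plan` function are invented, and treating "semi-automated" suites as needing a person involved is an assumption.

```python
from enum import Enum


class Trigger(Enum):
    CHECK_IN = "check-in"
    OVERNIGHT = "overnight"
    WEEKLY = "weekly"


# The schedule above expressed as data: (suite name, degree of automation).
SCHEDULE = {
    Trigger.CHECK_IN: [
        ("unit", "automated"),
        ("integration", "automated"),
        ("acceptance", "automated"),
    ],
    Trigger.OVERNIGHT: [
        ("gui", "automated"),
    ],
    Trigger.WEEKLY: [
        ("performance", "semi-automated"),
        ("security", "semi-automated"),
        ("user-experience", "manual"),
        ("exploratory", "manual"),
    ],
}


def plan(trigger):
    """Split a trigger's suites into those a CI server could launch
    unattended and those that need a person involved (assumed to be
    anything not fully automated)."""
    unattended, needs_person = [], []
    for suite, mode in SCHEDULE[trigger]:
        if mode == "automated":
            unattended.append(suite)
        else:
            needs_person.append(suite)
    return unattended, needs_person
```

Calling `plan(Trigger.CHECK_IN)` returns the three fast automated suites and no manual work, while `plan(Trigger.WEEKLY)` returns nothing to launch unattended and four sessions that need someone from the team.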

Conclusion

All in all, we keep our testing tight, focused and heavily automated. We find our mix of tools and schedule throws up potential problems nice and early. We continually use our Sprint Reviews and retrospectives to improve our processes and to introduce new Agile testing ideas.